手撕Res-Net残差网络模型

从0写一个ResNet网络,并在最后讲述训练过程

在这篇文章中,我将从头开始实现一个ResNet(残差网络),并展示如何训练这个网络。ResNet是由何凯明等人在2015年提出的,它通过引入残差模块解决了深层神经网络训练困难的问题。

1. 什么是ResNet?

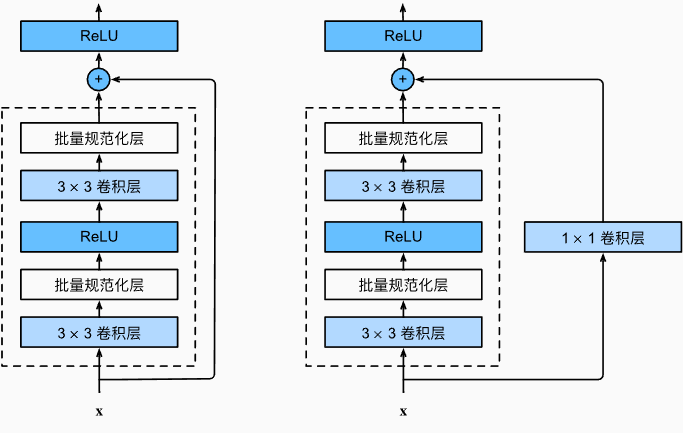

ResNet的核心思想是通过引入“残差块”(Residual Block)来构建深层网络。残差块通过跳跃连接(skip connection)使得梯度可以直接传递,从而缓解了梯度消失问题。

2. 实现ResNet

我们将使用PyTorch来实现ResNet。首先,我们需要定义一个残差块。

import torch

import torch.nn as nn

class ResidualBlock(nn.Module):

def __init__(self, in_channels, out_channels, stride=1, downsample=None):

super(ResidualBlock, self).__init__()

self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride, padding=1, bias=False)

self.bn1 = nn.BatchNorm2d(out_channels)

self.relu = nn.ReLU(inplace=True)

self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=1, padding=1, bias=False)

self.bn2 = nn.BatchNorm2d(out_channels)

self.downsample = downsample

def forward(self, x):

identity = x

if self.downsample is not None:

identity = self.downsample(x)

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out += identity

out = self.relu(out)

return out

接下来,我们定义ResNet网络结构。

class ResNet(nn.Module):

def __init__(self, block, layers, num_classes=1000):

super(ResNet, self).__init__()

self.in_channels = 64

self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)

self.bn1 = nn.BatchNorm2d(64)

self.relu = nn.ReLU(inplace=True)

self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

self.layer1 = self._make_layer(block, 64, layers[0])

self.layer2 = self._make_layer(block, 128, layers[1], stride=2)

self.layer3 = self._make_layer(block, 256, layers[2], stride=2)

self.layer4 = self._make_layer(block, 512, layers[3], stride=2)

self.avgpool = nn.AdaptiveAvgPool2d((1, 1))

self.fc = nn.Linear(512 * block.expansion, num_classes)

def _make_layer(self, block, out_channels, blocks, stride=1):

downsample = None

if stride != 1 or self.in_channels != out_channels * block.expansion:

downsample = nn.Sequential(

nn.Conv2d(self.in_channels, out_channels * block.expansion, kernel_size=1, stride=stride, bias=False),

nn.BatchNorm2d(out_channels * block.expansion),

)

layers = []

layers.append(block(self.in_channels, out_channels, stride, downsample))

self.in_channels = out_channels * block.expansion

for _ in range(1, blocks):

layers.append(block(self.in_channels, out_channels))

return nn.Sequential(*layers)

def forward(self, x):

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

x = self.avgpool(x)

x = torch.flatten(x, 1)

x = self.fc(x)

return x

我们可以通过以下代码来实例化一个ResNet-18模型:

def resnet18():

return ResNet(ResidualBlock, [2, 2, 2, 2])

3. 训练ResNet

接下来,我们将展示如何训练这个ResNet模型。我们将使用CIFAR-10数据集进行训练。

import torch.optim as optim

import torchvision

import torchvision.transforms as transforms

# 数据预处理

transform = transforms.Compose([

transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),

])

trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=128, shuffle=True, num_workers=2)

testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform)

testloader = torch.utils.data.DataLoader(testset, batch_size=100, shuffle=False, num_workers=2)

# 实例化模型、定义损失函数和优化器

net = resnet18()

criterion = nn.CrossEntropyLoss()

optimizer = optim.SGD(net.parameters(), lr=0.1, momentum=0.9, weight_decay=5e-4)

# 训练模型

for epoch in range(10): # 训练10个epoch

net.train()

running_loss = 0.0

for i, data in enumerate(trainloader, 0):

inputs, labels = data

optimizer.zero_grad()

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

running_loss += loss.item()

if i % 100 == 99: # 每100个batch打印一次损失

print(f'[Epoch {epoch + 1}, Batch {i + 1}] loss: {running_loss / 100:.3f}')

running_loss = 0.0

print('Finished Training')

4. 总结

在这篇文章中,我们从头实现了一个ResNet网络,并展示了如何在CIFAR-10数据集上进行训练。通过引入残差块,ResNet能够有效地训练深层神经网络。如果你有任何问题或建议,欢迎在评论区留言。

本文是原创文章,采用 CC BY-NC-ND 4.0 协议,完整转载请注明来自 Enzo

评论

匿名评论

隐私政策

你无需删除空行,直接评论以获取最佳展示效果